Have you recently made changes to URLs on your site? If so, you can ask Google to recrawl them with the Fetch as Googlebot feature in Google Webmaster Tools, which lets you submit updated URLs for indexing instead of waiting for Googlebot's next regular crawl. Under the list of tools there is a “Fetch as Googlebot” link, where you enter a URL from your website, leaving off the domain name, and click the “Fetch” button. Once the fetch succeeds, you can submit that page to the Google index by clicking the “Submit to Index” button.

Steps-

- Go to Google Webmaster Tools and log in to your account.

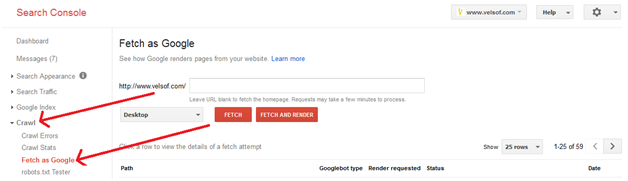

From the Google Webmaster Tools home screen, select your domain name, expand the Crawl menu, and then click the Fetch as Google link.

- Fetch as Google

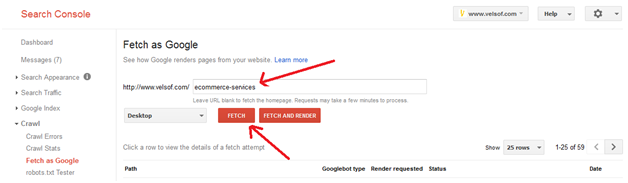

Enter the page URL in the input field, leaving off the domain name, and then click the FETCH button. The fetched page is then listed as shown below:
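If you want a rough preview of what the fetch will see, the sketch below joins your domain and the domain-less path the way the input field expects, and requests the page with one of Googlebot's published user-agent strings. It is only an approximation, assuming your server treats that user-agent the same way it treats the real Googlebot; the actual fetch runs on Google's own infrastructure. The helper names `build_googlebot_request` and `fetch_status` are illustrative and not part of any Google API.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# One of Googlebot's published user-agent strings. Sending it only imitates
# Googlebot; the real fetch comes from Google's own servers.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_googlebot_request(domain: str, path: str) -> Request:
    """Join a domain and a domain-less path, as typed into the tool, into one request."""
    full_url = domain.rstrip("/") + "/" + path.lstrip("/")
    return Request(full_url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_status(request: Request, timeout: float = 10.0) -> int:
    """Return the HTTP status code for the request (needs network access).

    urlopen follows redirects, so this is the status of the final URL.
    Network failures such as DNS errors still raise urllib.error.URLError.
    """
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        # 4xx and 5xx responses raise HTTPError; its code is the status we want.
        return err.code
```

For example, `fetch_status(build_googlebot_request("https://www.example.com", "blog/updated-post"))` returns 200 when the page loads normally, and a code such as 404 when it does not.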

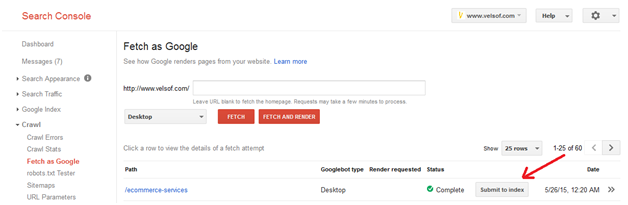

- Submit For Indexing

Once the “Fetch Status” shows as successful, you can submit that page for indexing. Click the “Submit to Index” button to continue.

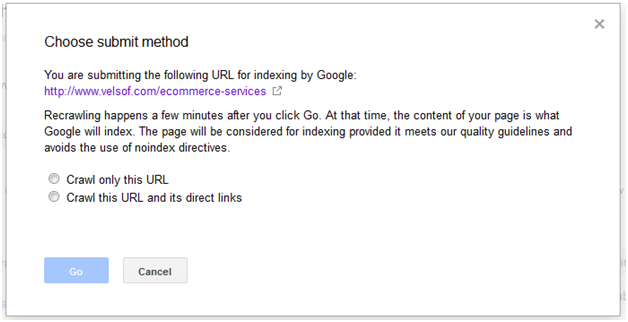

The pop-up shown above then gives you a choice: you can submit only the URL itself, or the URL together with the pages it links to internally.

Select “Crawl only this URL” if a single page has been recently updated. With this option, Google crawls only that specific URL. Note that submitting a URL does not guarantee that Google will index it.

Select “Crawl this URL and its direct links” if your site has been updated significantly.
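“Crawl this URL and its direct links” also sends Google to the pages that the submitted URL links to. To get a feel for which internal pages that choice might cover, the sketch below lists the same-host links in a page's HTML using Python's standard-library `html.parser`. It assumes that “direct links” means ordinary `<a href>` links on the same host, which is a simplification of whatever Google actually selects; `LinkCollector` and `direct_internal_links` are hypothetical helpers.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def direct_internal_links(page_url: str, html: str) -> list[str]:
    """Return unique same-host URLs linked directly from one page, in page order."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlparse(page_url).netloc
    links: list[str] = []
    seen: set[str] = set()
    for href in collector.hrefs:
        # Resolve relative links against the page and drop #fragments.
        absolute = urljoin(page_url, href).split("#", 1)[0]
        parsed = urlparse(absolute)
        # Keep only http(s) links on the same host (skips mailto:, other sites).
        if parsed.scheme in ("http", "https") and parsed.netloc == host and absolute not in seen:
            seen.add(absolute)
            links.append(absolute)
    return links
```

Running it on your own page's HTML gives a quick list of the internal pages that are likely to be recrawled along with it.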

Submitting individual URLs is limited to 50 submissions per week, while submitting a URL together with all its linked pages is limited to 10 submissions per month.
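The limits above are easy to track yourself. The sketch below counts recent submissions against them; treating “week” and “month” as rolling 7-day and 30-day windows is an assumption, as are the names `LIMITS` and `remaining_submissions`.

```python
from datetime import date, timedelta

# Limits as stated above. Treating "week" and "month" as rolling 7- and
# 30-day windows is an assumption, not documented tool behavior.
LIMITS = {
    "url_only": (50, timedelta(days=7)),
    "url_and_links": (10, timedelta(days=30)),
}


def remaining_submissions(kind: str, history: list[date], today: date) -> int:
    """Return how many submissions of `kind` are left in the window ending today.

    `history` holds the dates of earlier submissions of the same kind.
    """
    limit, window = LIMITS[kind]
    used = sum(1 for day in history if today - window < day <= today)
    return max(limit - used, 0)


if __name__ == "__main__":
    today = date(2024, 3, 31)
    print(remaining_submissions("url_only", [date(2024, 3, 25), date(2024, 3, 30)], today))  # 48
```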

Click OK. You will then see a message confirming that the URL (and, if you chose that option, its linked pages) has been submitted for indexing. This completes the submission process.

Google’s Inability to Crawl and Index Particular Pages

Google crawls a page unless the website owner has told it not to. Those instructions live in the robots.txt file, which sits at the root of the site's server (for example, https://example.com/robots.txt). A robots.txt file that lets Googlebot crawl everything looks like this:

```
User-agent: Googlebot
Disallow:
```

The empty Disallow value allows Googlebot to crawl all of your site's content (writing “Disallow: /” instead would block the entire site). If you don't want the crawler to access a particular URL, put its path on the Disallow line:

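```
User-agent: Googlebot
Disallow: /example
```

Googlebot will then skip https://example.com/example and any other URL whose path begins with /example. Note that Disallow takes a path relative to the site root, not a full URL, and that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it.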
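To check what a set of rules actually permits, you can run them through Python's standard-library `urllib.robotparser`. It follows the original prefix-matching robots.txt rules and does not implement Google's wildcard extensions, so treat it as a sanity check rather than a replica of Googlebot; the helper `googlebot_can_crawl` and the rule strings are illustrative.

```python
from urllib.robotparser import RobotFileParser


def googlebot_can_crawl(robots_txt: str, url: str) -> bool:
    """Parse robots.txt text and report whether the Googlebot user agent may crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() takes an iterable of lines
    return parser.can_fetch("Googlebot", url)


ALLOW_ALL = "User-agent: Googlebot\nDisallow:\n"               # empty value: nothing blocked
BLOCK_ALL = "User-agent: Googlebot\nDisallow: /\n"             # "/" matches every path
BLOCK_EXAMPLE = "User-agent: Googlebot\nDisallow: /example\n"  # paths starting with /example

if __name__ == "__main__":
    page = "https://www.example.com/blog/post"
    print(googlebot_can_crawl(ALLOW_ALL, page))  # True
    print(googlebot_can_crawl(BLOCK_ALL, page))  # False
    for url in (
        "https://www.example.com/example",
        "https://www.example.com/example-old",  # also blocked: rules match by prefix
        "https://www.example.com/blog/post",
    ):
        print(url, googlebot_can_crawl(BLOCK_EXAMPLE, url))
```

The prefix match is worth remembering: “Disallow: /example” also blocks /example-old, so end the path with a slash (for example “/example/”) if you only mean a directory.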

Google may also decline to index a page for reasons that have nothing to do with robots.txt, for example:

- Your page may have spammy content

- Your page may have malware

- Your page may have materials that can harm a system

Conclusion-

The Fetch as Google tool can give your marketing efforts a boost by getting updated pages in front of Google searchers sooner. If you want to reach the search rankings you are aiming for, make a habit of fetching and submitting your pages every time you update your website.